
Understanding Transformer Architecture and Its Role in ChatGPT

12th Sep, 2023 | Manan M.

- Artificial Intelligence

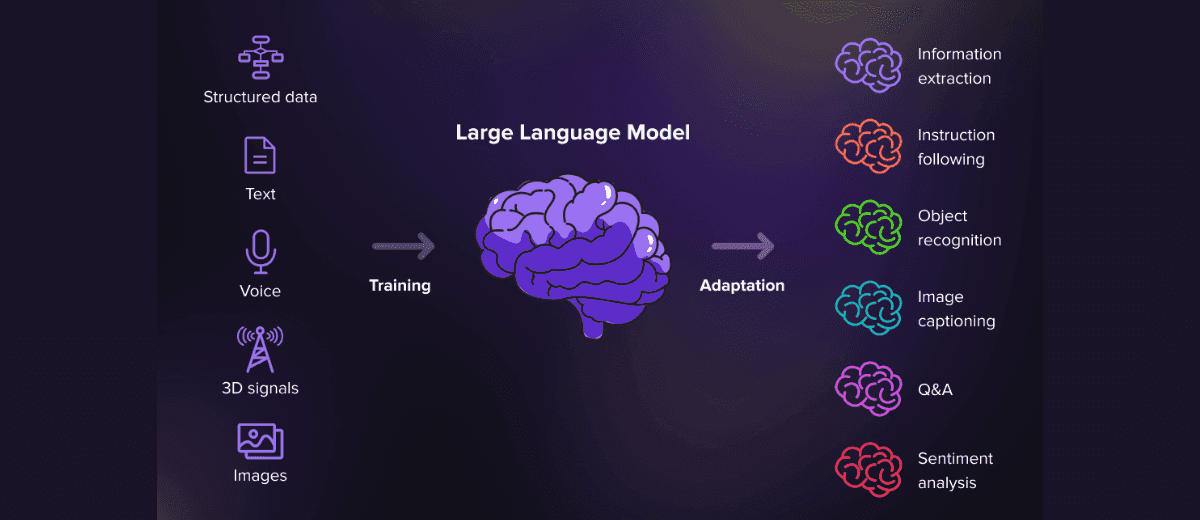

Image Source: serokell.io

Introduction

Many of us are fascinated by ChatGPT and how it simplifies our lives, from simple tasks like writing emails to complex ones like debugging code.

Have you ever wondered which underlying architecture makes large language models like ChatGPT and Bard so powerful?

These models are all based on the Transformer architecture, introduced in the paper "Attention Is All You Need" [1].

Let’s dive in and look at the main ideas behind the Transformer architecture.

Background

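Before the Transformer, recurrent neural networks (RNNs) and Long Short-Term Memory (LSTM) networks were the go-to neural networks for natural language processing tasks. However, RNNs suffer from vanishing and exploding gradients during training: the gradient is multiplied by similar factors at every time step, so over long sequences it tends to shrink toward zero or grow without bound.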
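To see why, consider a deliberately simplified RNN with a single scalar hidden state and no nonlinearity, h_t = w * h_{t-1} + x_t. This toy recurrence is an assumption for illustration; real RNNs use weight matrices and activation functions. Here each step contributes a local derivative of w, so the gradient of the last hidden state with respect to the first is w multiplied by itself once per step. The sketch below applies the chain rule step by step.

```python
def gradient_through_time(w: float, steps: int) -> float:
    """Return d h_T / d h_0 for the toy recurrence h_t = w * h_{t-1} + x_t.

    Each step contributes the local derivative d h_t / d h_{t-1} = w,
    and the chain rule multiplies these local derivatives together.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # chain rule: multiply by the per-step derivative
    return grad


if __name__ == "__main__":
    for w in (0.5, 1.0, 1.5):
        print(f"w={w}: gradient after 50 steps = {gradient_through_time(w, 50):.3e}")
```

With w = 0.5 the gradient after 50 steps is below 1e-15 (vanishing), while with w = 1.5 it exceeds 1e8 (exploding); only w = 1 keeps it stable.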

A related concern is that RNNs struggle to remember words from early in a long sentence, so they lose the context.

LSTM networks were developed to address this problem; their gates let them retain important words in a sentence over longer spans. Still, LSTM training is slow because it is inherently sequential: the input to each cell depends on the output of the previous cell, so the time steps of a sentence cannot be processed in parallel.
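The sequential dependency is easy to see in code. Below is a minimal sketch of a single-unit (scalar) LSTM using the standard forget, input, and output gates. The values in `WEIGHTS` are hypothetical and chosen only for illustration; a real LSTM uses weight matrices learned during training. The loop in `run_lstm` has to finish step t - 1 before it can start step t, which is exactly what prevents processing the words of a sentence in parallel.

```python
from __future__ import annotations

import math

# Hypothetical weights for a single-unit (scalar) LSTM, chosen only for illustration.
# Each gate has (input weight, recurrent weight, bias); a real LSTM learns weight matrices.
WEIGHTS = {
    "forget": (0.5, 0.5, 1.0),
    "input": (0.8, 0.2, 0.0),
    "candidate": (1.0, 0.3, 0.0),
    "output": (0.6, 0.4, 0.0),
}


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float, weights: dict = WEIGHTS) -> tuple[float, float]:
    """One LSTM time step; returns the new (hidden state, cell state)."""

    def pre_activation(gate: str) -> float:
        w_x, w_h, b = weights[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre_activation("input"))        # how much new information to write
    g = math.tanh(pre_activation("candidate"))  # candidate values to write
    o = sigmoid(pre_activation("output"))       # how much of the cell state to expose
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


def run_lstm(inputs: list[float]) -> list[float]:
    """Process a sequence strictly in order: step t needs (h, c) from step t - 1."""
    h, c = 0.0, 0.0
    hidden_states = []
    for x in inputs:
        h, c = lstm_step(x, h, c)  # cannot start until the previous step has finished
        hidden_states.append(h)
    return hidden_states


if __name__ == "__main__":
    print(run_lstm([0.1, -0.4, 0.9, 0.3]))
```

Because every hidden state is carried into the next step, changing an early input changes all later outputs, and a GPU cannot compute step 5 before steps 1 to 4 are done.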

The Transformer architecture overcomes these problems. It uses a self-attention mechanism to keep track of context across long sentences, and, unlike an LSTM, it can be trained in parallel to take advantage of GPUs, which results in lower training time than an LSTM with the same number of parameters.

Architecture of Transformer

The Transformer follows an encoder-decoder design. The first part of the network, the encoder, converts the input words into vectors that capture their meaning in context; the second part, the decoder, uses these vectors to produce the output.

Fig 1. Transformer - Encoder and decoder

It is built mainly from four components -

Fig 2. Transformer architecture

1. Word Embeddings

Since neural networks perform all their computations on numbers, input sentences must be converted into numbers (vectors) before a Transformer can be trained on them. This is done using word embeddings.

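The words in the training corpus are converted into vectors, one per word, using word embeddings. The original Transformer learns its embedding vectors during training; pretrained word embeddings such as word2vec and GloVe are another common way to obtain them. (BERT, often listed alongside them, is itself a Transformer-based model that produces context-dependent representations rather than one fixed vector per word.)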
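A minimal sketch of the lookup step, assuming simple whitespace tokenization and a randomly initialized table (in a trained model the values are learned, and production systems typically use subword tokenizers): each word is mapped to an integer id, and the id selects one row of the embedding table. `build_vocab`, `init_embeddings`, and `embed` are hypothetical helper names for illustration.

```python
from __future__ import annotations

import random


def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Assign each distinct lowercase word an integer id, in order of first appearance."""
    vocab: dict[str, int] = {}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def init_embeddings(vocab_size: int, d_model: int, seed: int = 0) -> list[list[float]]:
    """One vector of length d_model per vocabulary entry (random here, learned in practice)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]


def embed(sentence: str, vocab: dict[str, int], table: list[list[float]]) -> list[list[float]]:
    """Look up the embedding vector for each word in the sentence."""
    return [table[vocab[word]] for word in sentence.lower().split()]


if __name__ == "__main__":
    corpus = ["Transformers are better than LSTMs", "LSTMs are better than Transformers"]
    vocab = build_vocab(corpus)
    table = init_embeddings(len(vocab), d_model=4)
    vectors = embed(corpus[0], vocab, table)
    print(vocab)
    print(len(vectors), "vectors of size", len(vectors[0]))
```

The sentence becomes a list of vectors, one per word, which is the form the rest of the network works with.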

2. Positional Encoding

Consider the two sentences mentioned below -

- Transformers are better than LSTMs.

- LSTMs are better than Transformers.

Even though the two sentences contain exactly the same words, and therefore the same word embeddings, their meanings are completely different because of the relative positions of the words in each sentence.
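To make this concrete with hypothetical, hand-picked 3-dimensional embeddings (real embeddings are learned and much larger), the two example sentences produce exactly the same collection of word vectors, only in a different order. Any summary that ignores order, such as their average, therefore cannot tell them apart.

```python
from __future__ import annotations

# Hypothetical 3-dimensional embeddings, hand-picked for illustration only.
EMBEDDINGS = {
    "transformers": [0.5, -1.0, 0.25],
    "are": [0.0, 0.5, 0.5],
    "better": [1.0, 0.25, -0.5],
    "than": [-0.25, 0.0, 0.75],
    "lstms": [-0.5, 1.0, 0.0],
}


def sentence_vectors(sentence: str) -> list[list[float]]:
    """Look up each word's vector, ignoring case and a trailing period."""
    return [EMBEDDINGS[w] for w in sentence.lower().rstrip(".").split()]


def mean_pool(vectors: list[list[float]]) -> list[float]:
    """Average the word vectors dimension by dimension; the result ignores word order."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


if __name__ == "__main__":
    a = sentence_vectors("Transformers are better than LSTMs.")
    b = sentence_vectors("LSTMs are better than Transformers.")
    print(sorted(a) == sorted(b))        # same collection of vectors
    print(mean_pool(a) == mean_pool(b))  # identical order-agnostic summary
```

Both comparisons print True, even though the sentences say opposite things.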

So, along with representing words as vectors, we also need to capture the order of the words in a sentence. Positional encoding lets the Transformer take that order into account.

Positional encodings are added to the word embedding vectors to account for the order of words in a sentence. The original Transformer uses sine and cosine functions of different wavelengths to generate a distinct positional encoding for each position in the sentence and adds it to the word embedding at that position.
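The sinusoidal scheme from the original paper [1] is short enough to write out. For position pos and dimension pair index i, dimension 2i gets sin(pos / 10000^(2i/d_model)) and dimension 2i + 1 gets the cosine of the same angle, where d_model is the embedding size. The sketch below uses plain Python lists for clarity; `positional_encoding` and `add_positions` are illustrative names, not a library API.

```python
from __future__ import annotations

import math


def positional_encoding(num_positions: int, d_model: int) -> list[list[float]]:
    """Sinusoidal positional encodings as described in "Attention Is All You Need".

    PE[pos][2i]     = sin(pos / 10000 ** (2i / d_model))
    PE[pos][2i + 1] = cos(pos / 10000 ** (2i / d_model))
    """
    pe = [[0.0] * d_model for _ in range(num_positions)]
    for pos in range(num_positions):
        # `dim` steps over the even dimensions, so it plays the role of 2i above.
        for dim in range(0, d_model, 2):
            angle = pos / (10000 ** (dim / d_model))
            pe[pos][dim] = math.sin(angle)
            if dim + 1 < d_model:  # guard for an odd d_model
                pe[pos][dim + 1] = math.cos(angle)
    return pe


def add_positions(embeddings: list[list[float]]) -> list[list[float]]:
    """Add each position's encoding to the word embedding at that position."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(vec, pos_vec)] for vec, pos_vec in zip(embeddings, pe)]


if __name__ == "__main__":
    for row in positional_encoding(num_positions=4, d_model=6):
        print([round(v, 3) for v in row])
```

Low dimensions oscillate quickly as the position changes, and higher dimensions change slowly, so each position gets its own pattern. Because the encoding depends on position, the same word placed at two different positions ends up with two different input vectors.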

3. Attention Layers

After positional encodings are added to the word embeddings, we get new vectors that capture both what each word means and where it sits in the sentence. The main idea of attention is to work out, for each word, how relevant every other word in the sentence is to it, which gives that word its context and importance.

For example, consider the sentence -

- “Transformers are better than LSTMs; they can be trained in parallel.”

In the above sentence, to find out which word “they” refers to, we calculate its attention values with every word in the sentence. These values give the Transformer the context that “they” refers to “Transformers” and not “LSTMs”.
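The attention values are computed with scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, from the original paper [1]. Each word vector is projected into a query, a key, and a value. The query of one word is compared with the keys of all words, a softmax turns the scaled scores into weights that sum to 1, and the output is the weighted sum of the values. The minimal sketch below is single-head (the paper uses several heads) and uses plain lists. The projection matrices `w_q`, `w_k`, `w_v` would be learned in a real model; here they are just illustrative inputs.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix):
    """Scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V.

    Returns (output, weights); weights[i][j] is how much word i attends to word j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


if __name__ == "__main__":
    # Three toy "word" vectors and identity projections (hypothetical values).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    _, weights = self_attention(x, identity, identity, identity)
    for row in weights:
        print([round(a, 3) for a in row])
```

Each row of `weights` is one word's attention distribution over the whole sentence. In a trained model, the row for “they” would put most of its weight on “Transformers”.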

The Transformer uses the attention mechanism in three different ways -

- To calculate self-attention in the encoder.

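- To calculate encoder-decoder attention, where the decoder attends to the output of the encoder.

- To calculate masked self-attention in the decoder, where each word can attend only to the words before it.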
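The three uses differ mainly in where the queries, keys, and values come from, plus a mask in the decoder. The sketch below reuses one attention function for all three. To stay short, it omits the learned projections (effectively assuming identity matrices), and the input vectors are arbitrary illustrative values standing in for real encoder and decoder states. With `causal=True`, scores for later positions are set to negative infinity before the softmax, so those positions receive zero weight.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def attention(queries: Matrix, keys: Matrix, values: Matrix, causal: bool = False) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention; returns (output, weights).

    Learned query/key/value projections are omitted (i.e. assumed to be identity).
    With causal=True, position i may attend only to positions j <= i.
    """
    d_k = len(keys[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out a "future" word
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    output = [
        [sum(w * v[d] for w, v in zip(row, values)) for d in range(len(values[0]))]
        for row in weights
    ]
    return output, weights


if __name__ == "__main__":
    source = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # stand-in for 3 encoded source words
    target = [[0.5, 0.5], [1.0, 0.0]]              # stand-in for 2 target words so far

    # 1. Encoder self-attention: queries, keys and values all come from the source.
    _, enc_w = attention(source, source, source)
    # 2. Encoder-decoder attention: queries from the decoder, keys/values from the encoder.
    _, cross_w = attention(target, source, source)
    # 3. Masked decoder self-attention: each target word sees only itself and earlier words.
    _, dec_w = attention(target, target, target, causal=True)

    print(len(enc_w), "x", len(enc_w[0]))      # 3 x 3
    print(len(cross_w), "x", len(cross_w[0]))  # 2 x 3
    print(dec_w[0])                            # [1.0, 0.0]
```

The encoder-decoder weights form a target-by-source grid, and the first decoder position can only attend to itself.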

4. Residual Connections and Feed-Forward Network

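After the self-attention values are calculated for each word in the sentence, a residual connection adds the sub-layer's input (in the first layer, the positionally encoded word vectors) back to them, and the sum is layer-normalized. This way, the self-attention sub-layer only needs to learn how relevant each word is to the others; it does not have to carry the word embeddings and positional encodings forward itself. The result is then passed through a position-wise feed-forward network, which also has a residual connection and layer normalization. At the end of the decoder, a linear layer and a softmax turn the output into the probability of each word in the vocabulary.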
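A minimal sketch of these steps for a single word vector, assuming the arrangement of the original paper: each sub-layer is wrapped as LayerNorm(x + Sublayer(x)), and the feed-forward network is max(0, xW1 + b1)W2 + b2. The learned gain and bias of layer normalization are omitted, all weights are supplied by the caller, and the function names are illustrative rather than a library API.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x: Vector, sublayer_out: Vector) -> Vector:
    """Residual connection followed by layer normalization: LayerNorm(x + Sublayer(x))."""
    return layer_norm([a + b for a, b in zip(x, sublayer_out)])


def linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Compute x @ w + b for a single vector x."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]


def feed_forward(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """Position-wise feed-forward network: max(0, x W1 + b1) W2 + b2."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # ReLU
    return linear(hidden, w2, b2)


def encoder_sublayers(x: Vector, attn_out: Vector, ffn_params: tuple) -> Vector:
    """The two residual blocks of one encoder layer, given the attention output."""
    y = add_and_norm(x, attn_out)                          # residual around attention
    return add_and_norm(y, feed_forward(y, *ffn_params))  # residual around the FFN


if __name__ == "__main__":
    d_model, d_ff = 4, 8
    # Hypothetical weights with a simple fixed pattern, only for illustration.
    w1 = [[0.1 * ((i + j) % 3 - 1) for j in range(d_ff)] for i in range(d_model)]
    w2 = [[0.1 * ((i * j) % 3 - 1) for j in range(d_model)] for i in range(d_ff)]
    ffn = (w1, [0.0] * d_ff, w2, [0.0] * d_model)
    x = [1.0, 0.0, -1.0, 0.5]         # a positionally encoded word vector
    attn_out = [0.2, 0.1, 0.0, -0.3]  # that word's self-attention output
    print(encoder_sublayers(x, attn_out, ffn))
```

Because the input is added back before normalizing, the attention and feed-forward sub-layers only have to learn a correction to the vector rather than rebuild it from scratch.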

Advantages of the Transformer Architecture Over RNNs and LSTMs

1. Parallelization During Training

Unlike RNN and LSTM networks, where the input of the current cell depends on the output of the previous cell, the computation for each position in a Transformer layer does not depend on the output at the previous position.

Hence, the Transformer architecture can be trained in parallel, taking advantage of the high parallel computing power of GPUs. As a result, a Transformer typically needs much less training time than an LSTM with the same number of parameters.
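The key property is that a self-attention output for one position depends only on the input sequence, not on the outputs at other positions. The sketch below is single-head, assumes identity projections, and uses illustrative function names. It computes each position's output separately, once by dispatching all positions to a thread pool and once in reverse order, and gets identical results. CPython threads do not actually speed up pure-Python arithmetic, so the pool here only demonstrates that the work is independent. On a GPU, the same independence is what lets all positions be computed together as matrix multiplications. In contrast, a recurrent cell needs the previous hidden state before it can process the next word.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]


def attend_one(i: int, x: Matrix) -> List[float]:
    """Self-attention output for position i (identity projections assumed).

    It reads only the input sequence x, never another position's output,
    so every position can be computed independently.
    """
    d = len(x[0])
    scores = [sum(a * b for a, b in zip(x[i], k)) / math.sqrt(d) for k in x]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [sum(e / total * v[c] for e, v in zip(exps, x)) for c in range(d)]


def attend_all_parallel(x: Matrix) -> Matrix:
    """Dispatch every position at once; results come back in position order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda i: attend_one(i, x), range(len(x))))


def attend_all_reversed(x: Matrix) -> Matrix:
    """Compute positions last-to-first to show that the order does not matter."""
    out: Matrix = [[] for _ in x]
    for i in reversed(range(len(x))):
        out[i] = attend_one(i, x)
    return out


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
    print(attend_all_parallel(x) == attend_all_reversed(x))
```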

2. Transformer Architecture Remembers Long-Term Dependencies

RNN and LSTM networks suffer from forgetfulness: they struggle to remember long-range dependencies in a sentence.

The Transformer architecture addresses this problem by calculating attention values, as described above, and using them to understand the context of each word and its importance in the input and output sentences. Because attention connects every word directly to every other word, information does not have to pass through every intermediate word to get from one end of a sentence to the other.

For shorter sentences, LSTMs and RNNs can do a fine job. For longer sentences exceeding 64 words, however, it is hard for LSTMs and RNNs to remember long-term dependencies, while it is easier for the Transformer architecture to capture them.

References

[1] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin. Attention is All You Need, 2017.
