- 27th Mar, 2025

- Sneha V.

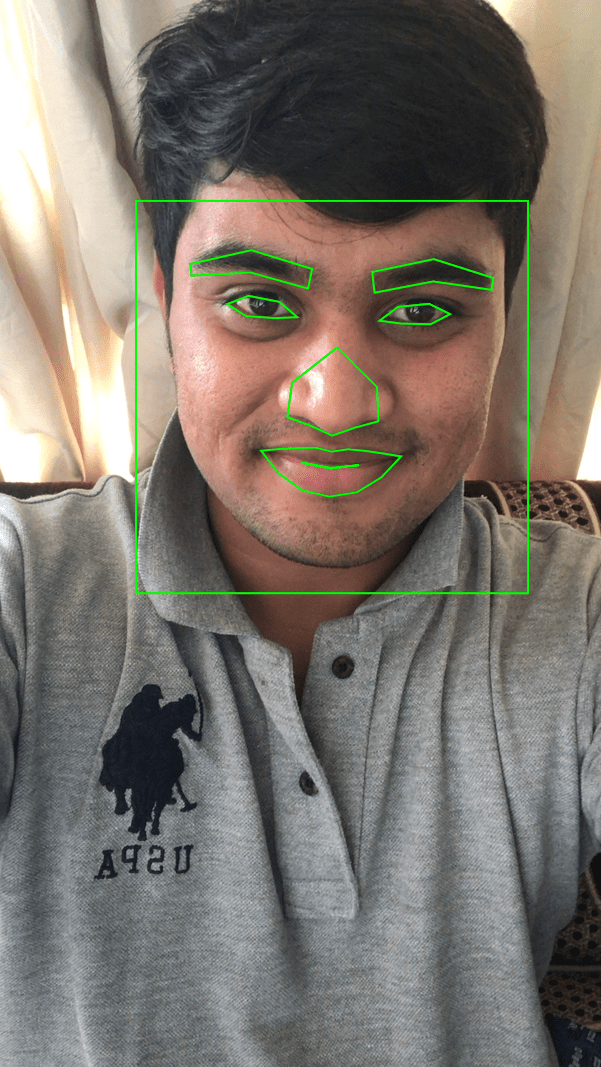

Real-time Face Detection on iOS

16th Jul, 2021 | Hardik D.

- Software Development

In an era dominated by technological advancements, face detection has emerged as a groundbreaking technology, transforming the way we interact with devices and systems.

From enhancing security measures to personalizing user experiences, the applications of face detection are diverse and continually evolving.

What are you going to learn?

We will show you how to detect faces and their features using the Vision framework in an iOS app. We will receive live frames from the front camera of an iOS device.

Next, we will analyze each frame using the Vision framework’s face detection. Analyzing a frame yields any face it contains, along with its features.

In this blog, you’ll learn how to use the Vision framework to:

- Create requests for face detection and detecting face landmarks.

- Process these requests and return the results.

- Overlay the results on the camera feed to get real-time face detection.

What is Face Detection?

Face detection is a subset of computer vision, a field of artificial intelligence (AI) that empowers machines to interpret and make decisions based on visual data.

At its core, face detection involves the identification and localization of human faces within images or videos.

This process relies on sophisticated algorithms that analyze facial features, patterns, and contextual information to accurately recognize and differentiate faces from their surroundings.

Face detection simply means that a system can tell that a human face is present in an image or video, and where it is. For example, face detection can drive the auto-focus functionality in cameras.

Why use the Vision Framework for Face Detection?

Apple states that the framework leverages the latest machine learning (deep learning) and computer vision techniques, and that this makes its algorithms more accurate and less likely to return false positives or false negatives.

The Vision Framework can detect and track rectangles, faces, and other salient objects across a sequence of images.

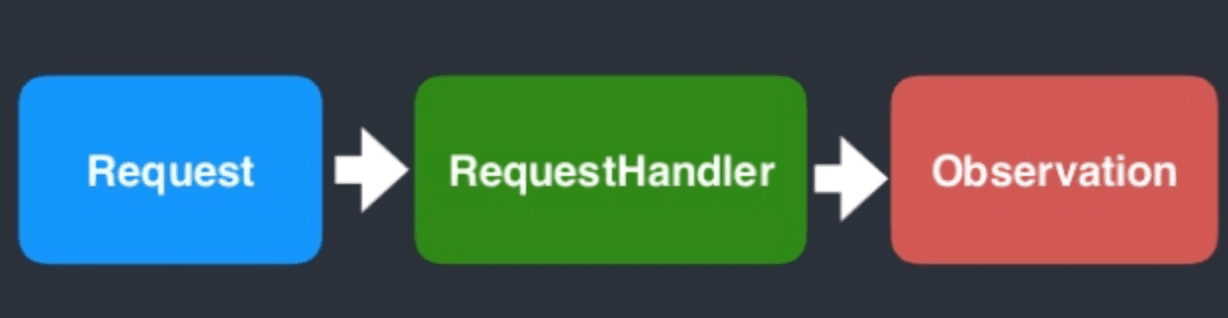

Vision Concept

The Vision framework follows a simple three-part mechanism:

A request, a request handler that performs it, and the observations produced as the result of that request.

Let’s look at some of the request classes Vision provides. Three of the main ones are:

- VNDetectFaceRectanglesRequest for Face detection.

- VNDetectBarcodesRequest for Barcode detection.

- VNDetectTextRectanglesRequest for Text region.

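For face detection on iOS, we only need VNDetectFaceRectanglesRequest. To detect facial features as well, this post uses VNDetectFaceLandmarksRequest, whose observations also include the face bounding box.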
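As a minimal sketch of how the request, the request handler, and the observations fit together, the helper below runs face detection on a single still image. The function name faceBoundingBoxes(in:) is made up for illustration and is not part of Vision; the live-camera version is built step by step later in this post.

```swift
import Vision
import CoreGraphics

/// Hypothetical helper: returns the normalized bounding box of every face found in a still image.
func faceBoundingBoxes(in image: CGImage) throws -> [CGRect] {
    // 1. The request describes what we want Vision to look for
    let request = VNDetectFaceRectanglesRequest()

    // 2. The request handler performs requests on one image
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request]) // runs synchronously

    // 3. The observations are the results of the request
    let observations = (request.results as? [VNFaceObservation]) ?? []
    return observations.map { $0.boundingBox }
}
```

The returned rectangles are normalized to the image, with values between 0 and 1 and the origin in the bottom-left corner.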

How to Stream the Front Camera Feed for Face Detection?

The first step is to stream the camera feed from the front or the back camera to the screen.

Let’s start by adding the camera feed to the ViewController. First, we need access to the front camera.

We will use the AVFoundation framework provided by Apple on the iOS platform to do so. The AVFoundation framework gives us access to the camera and delivers the camera output in our desired format for processing.

To gain access to the AVFoundation framework, add the following line after import Foundation in ViewController.swift:

```swift
import AVFoundation
```

Now we create an instance of a class called AVCaptureSession as a property of ViewController. This class coordinates multiple inputs, such as the microphone and camera, with multiple outputs.

```swift
private let captureSession = AVCaptureSession()
```

Next, we add the front camera as an input to our captureSession. The function starts by fetching the front camera device. Call this function at the end of viewDidLoad.

```swift
private func addCameraInput() {
    // Find a front-facing camera; the simulator does not provide one
    guard let device = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera, .builtInDualCamera, .builtInTrueDepthCamera],
        mediaType: .video,
        position: .front).devices.first else {
        fatalError("No front camera device found, please make sure to run the app on an iOS device and not a simulator")
    }
    // try! crashes if the input cannot be created
    let cameraInput = try! AVCaptureDeviceInput(device: device)
    self.captureSession.addInput(cameraInput)
}
```

To use the back camera as the input to captureSession instead, use the following version of the function:

```swift
private func addCameraInput() {
    // Find a back-facing camera; the simulator does not provide one
    guard let device = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera, .builtInDualCamera, .builtInTrueDepthCamera],
        mediaType: .video,
        position: .back).devices.first else {
        fatalError("No back camera device found, please make sure to run the app on an iOS device and not a simulator")
    }
    // try! crashes if the input cannot be created
    let cameraInput = try! AVCaptureDeviceInput(device: device)
    self.captureSession.addInput(cameraInput)
}
```

If you want a flip-camera button that switches between the front and back cameras, use the following functions:

```swift
fileprivate func swapCamera() {
    // Get the current camera input
    guard let input = captureSession.inputs.first as? AVCaptureDeviceInput else { return }

    // Begin a new session configuration and commit it when the function exits
    captureSession.beginConfiguration()
    defer { captureSession.commitConfiguration() }

    // Pick the camera on the opposite side
    let newPosition: AVCaptureDevice.Position = input.device.position == .back ? .front : .back
    guard let newDevice = captureDevice(with: newPosition) else { return }

    // Create the new capture input
    let deviceInput: AVCaptureDeviceInput
    do {
        deviceInput = try AVCaptureDeviceInput(device: newDevice)
    } catch {
        print(error.localizedDescription)
        return
    }

    // Swap the capture device inputs
    captureSession.removeInput(input)
    captureSession.addInput(deviceInput)
}

/// Returns a capture device at the requested position, or nil if none is found
fileprivate func captureDevice(with position: AVCaptureDevice.Position) -> AVCaptureDevice? {
    let devices = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera, .builtInDualCamera, .builtInTelephotoCamera],
        mediaType: .video,
        position: .unspecified).devices
    return devices.first { $0.position == position }
}
```

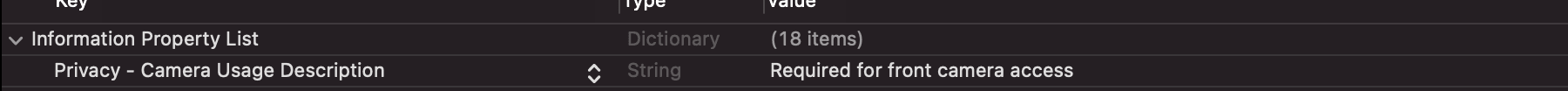

For an app to access the camera, it must declare why it needs the camera in its Info.plist file. Open Info.plist and add a new entry to the property list. For the key, add NSCameraUsageDescription, and for the value, enter a description such as Required for front camera access.
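The usage description is shown in the system prompt the first time the app asks for camera access. As a rough sketch of checking and requesting that access before configuring the session, something like the following could be used. The CameraAccess enum and both function names are hypothetical helpers for illustration; only AVCaptureDevice.authorizationStatus(for:) and AVCaptureDevice.requestAccess(for:completionHandler:) come from AVFoundation.

```swift
import AVFoundation

/// Hypothetical summary of what the app should do for a given authorization status.
enum CameraAccess {
    case granted      // safe to configure the capture session
    case needsPrompt  // the user has not been asked yet
    case denied       // denied or restricted; the user must change this in Settings
}

func cameraAccess(for status: AVAuthorizationStatus) -> CameraAccess {
    switch status {
    case .authorized: return .granted
    case .notDetermined: return .needsPrompt
    case .denied, .restricted: return .denied
    @unknown default: return .denied
    }
}

/// Runs `onGranted` on the main queue once camera access is available.
func withCameraAccess(_ onGranted: @escaping () -> Void) {
    switch cameraAccess(for: AVCaptureDevice.authorizationStatus(for: .video)) {
    case .granted:
        DispatchQueue.main.async { onGranted() }
    case .needsPrompt:
        // Shows the system prompt containing the NSCameraUsageDescription text
        AVCaptureDevice.requestAccess(for: .video) { granted in
            if granted { DispatchQueue.main.async { onGranted() } }
        }
    case .denied:
        break // nothing to start; camera access is unavailable
    }
}
```

With a helper like this, the camera setup calls in viewDidLoad could be wrapped in withCameraAccess { ... } so that they only run once access has been granted.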

Once we have the front camera feed, we have to display it on screen. For this task we will use the AVCaptureVideoPreviewLayer class.

AVCaptureVideoPreviewLayer is a subclass of CALayer that displays the camera feed. Add it as a new property of ViewController.

The property is declared lazy because its initializer refers to captureSession, another property of the same instance. The lazy keyword defers initialization until first use, by which point captureSession already exists.

```swift
private lazy var previewLayer = AVCaptureVideoPreviewLayer(session: self.captureSession)
```

We have to add previewLayer as a sublayer of the root view of our ViewController. Call this function at the end of viewDidLoad, after addCameraInput.

```swift
private func showCameraFeed() {
    self.previewLayer.videoGravity = .resizeAspectFill
    self.view.layer.addSublayer(self.previewLayer)
    // A sublayer's frame is expressed in its parent's coordinate space, hence bounds
    self.previewLayer.frame = self.view.bounds
}
```

The container view’s frame can change at different points in the UIViewController lifecycle, so we update the preview layer’s frame whenever the view lays out its subviews.

```swift
override func viewDidLayoutSubviews() {
    super.viewDidLayoutSubviews()
    // Keep the preview layer the same size as the view
    self.previewLayer.frame = self.view.bounds
}
```

Finally, start the captureSession so that it begins passing data from its input to the preview and to its outputs. Add the following line at the end of viewDidLoad:

```swift
self.captureSession.startRunning()
```
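To make the call order of the steps so far easier to see, here is a compact sketch of a view controller that only shows a preview. It is an illustration under a few assumptions rather than the exact code of this post: the class name CameraPreviewViewController and the sessionQueue property are made up, the camera lookup uses AVCaptureDevice.default(_:for:position:) instead of a discovery session, and the session is started on a background queue because Apple documents startRunning() as a blocking call.

```swift
import UIKit
import AVFoundation

// Hypothetical minimal controller; it mirrors the steps above in one place
final class CameraPreviewViewController: UIViewController {
    private let captureSession = AVCaptureSession()
    private lazy var previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
    // Assumption: a dedicated serial queue for session work
    private let sessionQueue = DispatchQueue(label: "capture_session_queue")

    override func viewDidLoad() {
        super.viewDidLoad()
        addCameraInput()  // 1. attach the camera
        showCameraFeed()  // 2. put the preview layer on screen
        // 3. start the session without blocking the main thread
        sessionQueue.async { [captureSession] in
            captureSession.startRunning()
        }
    }

    override func viewDidLayoutSubviews() {
        super.viewDidLayoutSubviews()
        previewLayer.frame = view.bounds
    }

    private func addCameraInput() {
        // Returns quietly instead of crashing when no camera is available
        guard let device = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .front),
              let input = try? AVCaptureDeviceInput(device: device),
              captureSession.canAddInput(input) else { return }
        captureSession.addInput(input)
    }

    private func showCameraFeed() {
        previewLayer.videoGravity = .resizeAspectFill
        view.layer.addSublayer(previewLayer)
    }
}
```

The frame output and face detection steps described next would slot in between steps 2 and 3.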

How to Detect Faces and Draw Bounding Boxes on the Face?

Next, let’s extract each frame. For this task, our captureSession must output each image, which we do with AVCaptureVideoDataOutput. Within the ViewController class, create an instance of AVCaptureVideoDataOutput.

```swift
private let videoDataOutput = AVCaptureVideoDataOutput()
```

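Additionally, let’s configure videoDataOutput to deliver each frame to the ViewController. Call this function in viewDidLoad, before starting the captureSession.

```swift
private func getCameraFrames() {
    // Ask for frames as 32-bit BGRA pixel buffers
    self.videoDataOutput.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
    // Drop frames that arrive while an earlier frame is still being processed
    self.videoDataOutput.alwaysDiscardsLateVideoFrames = true
    // Deliver frames to this view controller on a background queue
    self.videoDataOutput.setSampleBufferDelegate(self, queue: DispatchQueue(label: "camera_frame_processing_queue"))
    self.captureSession.addOutput(self.videoDataOutput)
    guard let connection = self.videoDataOutput.connection(with: AVMediaType.video),
          connection.isVideoOrientationSupported else { return }
    connection.videoOrientation = .portrait
}
```

Because the function passes self as the sample buffer delegate, ViewController must declare conformance to AVCaptureVideoDataOutputSampleBufferDelegate:

```swift
class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {
    // existing properties and functions
}
```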
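Calling addOutput with an output the session cannot accept raises an exception, so a more defensive variant checks canAddOutput first. The sketch below is one way to do that; attachVideoOutput is a hypothetical free function written for illustration, not part of AVFoundation or of the original listing.

```swift
import AVFoundation

/// Hypothetical helper: configures a BGRA video data output and attaches it if the session accepts it.
/// Returns true when the output was added.
@discardableResult
func attachVideoOutput(_ output: AVCaptureVideoDataOutput,
                       to session: AVCaptureSession,
                       delegate: AVCaptureVideoDataOutputSampleBufferDelegate,
                       queue: DispatchQueue) -> Bool {
    output.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
    output.alwaysDiscardsLateVideoFrames = true
    output.setSampleBufferDelegate(delegate, queue: queue)

    // Only add the output when the session reports that it can take it
    guard session.canAddOutput(output) else { return false }
    session.addOutput(output)
    return true
}
```

Inside getCameraFrames, this could be called with self.videoDataOutput, self.captureSession, and self as the delegate.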

Now add the function that receives the frames from the captureSession. AVFoundation calls captureOutput(_:didOutput:from:) on the delegate for every frame that videoDataOutput delivers.

```swift
func captureOutput(
    _ output: AVCaptureOutput,
    didOutput sampleBuffer: CMSampleBuffer,
    from connection: AVCaptureConnection) {
    // Extract the pixel buffer that holds the frame's image data
    guard let frame = CMSampleBufferGetImageBuffer(sampleBuffer) else {
        debugPrint("unable to get image from sample buffer")
        return
    }
    self.detectFace(in: frame)
}
```

Now we will use the Vision framework’s VNDetectFaceLandmarksRequest for landmark detection. To access Vision, we must first import it at the top of ViewController.swift:

```swift
import Vision
```

Before the Vision framework can track a face, it has to find it. We do so by creating a VNImageRequestHandler and passing it a still image frame. In the case of video, submit individual frames to a request handler as they arrive in the delegate method.

```swift
private func detectFace(in image: CVPixelBuffer) {
    // The completion handler runs once Vision has finished analyzing the frame
    let faceDetectionRequest = VNDetectFaceLandmarksRequest(completionHandler: { (request: VNRequest, error: Error?) in
        // Layer updates must happen on the main queue
        DispatchQueue.main.async {
            if let results = request.results as? [VNFaceObservation] {
                self.handleFaceDetectionResults(results)
            } else {
                self.clearDrawings()
            }
        }
    })
    let imageRequestHandler = VNImageRequestHandler(cvPixelBuffer: image, orientation: .leftMirrored, options: [:])
    // try? ignores any error thrown while performing the request
    try? imageRequestHandler.perform([faceDetectionRequest])
}
```

Each result contains a property named boundingBox for the observed face. We take each face in turn and extract its bounding box.

```swift
// Layers currently drawn on top of the camera feed
private var drawings: [CAShapeLayer] = []

private func handleFaceDetectionResults(_ observedFaces: [VNFaceObservation]) {
    let facesBoundingBoxes: [CAShapeLayer] = observedFaces.flatMap({ (observedFace: VNFaceObservation) -> [CAShapeLayer] in
        // Convert the face bounding box to the preview layer's coordinates
        let faceBoundingBoxOnScreen = self.previewLayer.layerRectConverted(fromMetadataOutputRect: observedFace.boundingBox)
        let faceBoundingBoxPath = CGPath(rect: faceBoundingBoxOnScreen, transform: nil)
        let faceBoundingBoxShape = CAShapeLayer()
        faceBoundingBoxShape.path = faceBoundingBoxPath
        faceBoundingBoxShape.fillColor = UIColor.clear.cgColor
        faceBoundingBoxShape.strokeColor = UIColor.green.cgColor
        var newDrawings = [CAShapeLayer]()
        newDrawings.append(faceBoundingBoxShape)
        // Add the face features when landmarks are available
        if let landmarks = observedFace.landmarks {
            newDrawings += self.drawFaceFeatures(landmarks, screenBoundingBox: faceBoundingBoxOnScreen)
        }
        return newDrawings
    })
    facesBoundingBoxes.forEach({ faceBoundingBox in self.view.layer.addSublayer(faceBoundingBox) })
    self.drawings = facesBoundingBoxes
}

private func clearDrawings() {
    self.drawings.forEach({ drawing in drawing.removeFromSuperlayer() })
}
```

The face observation result returns a bounding box with the location of the face in the image. However, this box is expressed in normalized image coordinates (values between 0 and 1), not in screen points.

Therefore we need a conversion function. Apple provides one on AVCaptureVideoPreviewLayer, named layerRectConverted(fromMetadataOutputRect:), which converts from image coordinates to the preview layer’s screen coordinates.
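To show the kind of arithmetic such a conversion involves, here is a simplified sketch. It is not what layerRectConverted(fromMetadataOutputRect:) does internally: it assumes the image fills the view exactly, with no cropping, rotation, or mirroring, and only flips the y axis, since Vision’s normalized boxes have their origin in the bottom-left corner while UIKit’s is in the top-left. The function name viewRect(forNormalized:in:) is hypothetical.

```swift
import Foundation

/// Hypothetical helper: maps a normalized, bottom-left-origin rect into a top-left-origin view of the given size.
func viewRect(forNormalized box: CGRect, in viewSize: CGSize) -> CGRect {
    let width = box.width * viewSize.width
    let height = box.height * viewSize.height
    let x = box.minX * viewSize.width
    // Flip the y axis: the top edge of the box is (1 - maxY) from the top of the view
    let y = (1 - box.maxY) * viewSize.height
    return CGRect(x: x, y: y, width: width, height: height)
}

// A face covering the middle half of the width, in the upper-middle part of a 200 x 400 view
let normalizedBox = CGRect(x: 0.25, y: 0.5, width: 0.5, height: 0.25)
let onScreen = viewRect(forNormalized: normalizedBox, in: CGSize(width: 200, height: 400))
// onScreen has origin (50, 100) and size 100 x 100
```

In the app itself, the preview layer’s own conversion function remains the better choice because it accounts for the layer’s videoGravity.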

The detectFace function shown earlier already calls handleFaceDetectionResults on the main queue when Vision returns face observations, and clears the drawings otherwise, so no further change to it is needed.

The function below draws a single feature on the face. It is named drawEye, but it works for any landmark region:

```swift
private func drawEye(_ eye: VNFaceLandmarkRegion2D, screenBoundingBox: CGRect) -> CAShapeLayer {
    let eyePath = CGMutablePath()
    // Scale each normalized point into the on-screen face bounding box.
    // Note that the x and y components are swapped while scaling.
    let eyePathPoints = eye.normalizedPoints
        .map({ eyePoint in
            CGPoint(
                x: eyePoint.y * screenBoundingBox.height + screenBoundingBox.origin.x,
                y: eyePoint.x * screenBoundingBox.width + screenBoundingBox.origin.y)
        })
    eyePath.addLines(between: eyePathPoints)
    eyePath.closeSubpath()
    let eyeDrawing = CAShapeLayer()
    eyeDrawing.path = eyePath
    eyeDrawing.fillColor = UIColor.clear.cgColor
    eyeDrawing.strokeColor = UIColor.green.cgColor
    return eyeDrawing
}
```

Let’s draw some face features. I won’t cover every face feature available; the function below draws the eyes, eyebrows, nose, lips, and pupils. However, the same approach can be applied to any face feature.

We can access each feature’s points through the landmarks property of the face detection result, VNFaceObservation.

```swift
private func drawFaceFeatures(_ landmarks: VNFaceLandmarks2D, screenBoundingBox: CGRect) -> [CAShapeLayer] {
    // Every landmark region is optional; Vision may not find all of them
    let regions: [VNFaceLandmarkRegion2D?] = [
        landmarks.leftEye, landmarks.rightEye,
        landmarks.leftEyebrow, landmarks.rightEyebrow,
        landmarks.nose,
        landmarks.innerLips, landmarks.outerLips,
        landmarks.leftPupil, landmarks.rightPupil
    ]
    // Drop the missing regions and draw the rest with the same helper
    return regions
        .compactMap { $0 }
        .map { self.drawEye($0, screenBoundingBox: screenBoundingBox) }
}
```

Note that in the drawEye function we have to convert each point of the feature’s contour to screen points, as we did for the face bounding box.
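As a small worked example of that conversion, the sketch below repeats the arithmetic from drawEye for a single point, including the swapped x and y components. The free function screenPoint(forLandmark:in:) and the sample values are made up for illustration.

```swift
import Foundation

/// Hypothetical helper that mirrors the point conversion used in drawEye.
func screenPoint(forLandmark point: CGPoint, in screenBoundingBox: CGRect) -> CGPoint {
    return CGPoint(
        x: point.y * screenBoundingBox.height + screenBoundingBox.origin.x,
        y: point.x * screenBoundingBox.width + screenBoundingBox.origin.y)
}

// A face box at (100, 200) on screen, 80 points wide and 40 points tall
let faceBox = CGRect(x: 100, y: 200, width: 80, height: 40)
let landmarkPoint = CGPoint(x: 0.25, y: 0.5)
let converted = screenPoint(forLandmark: landmarkPoint, in: faceBox)
// x = 0.5 * 40 + 100 = 120
// y = 0.25 * 80 + 200 = 220
```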

Finally, update handleFaceDetectionResults so that it clears the previous frame’s drawings before adding new ones:

```swift
private func handleFaceDetectionResults(_ observedFaces: [VNFaceObservation]) {
    // Remove the shapes drawn for the previous frame
    self.clearDrawings()
    let facesBoundingBoxes: [CAShapeLayer] = observedFaces.flatMap({ (observedFace: VNFaceObservation) -> [CAShapeLayer] in
        // Convert the face bounding box to the preview layer's coordinates
        let faceBoundingBoxOnScreen = self.previewLayer.layerRectConverted(fromMetadataOutputRect: observedFace.boundingBox)
        let faceBoundingBoxPath = CGPath(rect: faceBoundingBoxOnScreen, transform: nil)
        let faceBoundingBoxShape = CAShapeLayer()
        faceBoundingBoxShape.path = faceBoundingBoxPath
        faceBoundingBoxShape.fillColor = UIColor.clear.cgColor
        faceBoundingBoxShape.strokeColor = UIColor.green.cgColor
        var newDrawings = [CAShapeLayer]()
        newDrawings.append(faceBoundingBoxShape)
        // Add the face features when landmarks are available
        if let landmarks = observedFace.landmarks {
            newDrawings += self.drawFaceFeatures(landmarks, screenBoundingBox: faceBoundingBoxOnScreen)
        }
        return newDrawings
    })
    facesBoundingBoxes.forEach({ faceBoundingBox in self.view.layer.addSublayer(faceBoundingBox) })
    self.drawings = facesBoundingBoxes
}
```

Conclusion

Face detection technology stands at the forefront of the technological revolution, influencing sectors ranging from security to marketing.

As we navigate the evolving landscape of AI, it is essential to embrace the potential of face detection while remaining vigilant about its ethical implications.

By fostering responsible innovation, we can unlock the full spectrum of benefits that this transformative technology has to offer.

Vision is Apple’s high-level framework for computer vision. It aims to combine high accuracy with short processing times, which makes it well suited to real-time tasks like the one in this post.

Processing happens on the device, which helps keep users’ data private. Together with a consistent request-based interface, no licensing cost, and real-time performance, this makes Vision a practical choice.

