Decoding Clustering Algorithms: A Beginner's Guide

18th Sep, 2023 | Aanya G.

- Artificial Intelligence

Finding relevant insights in today's massive sea of data can be like looking for a needle in a haystack. This is where clustering methods can help. Clustering is a powerful technique for organising data and uncovering its hidden structure, which helps in making sense of complex datasets.

In this blog, we will delve into the intriguing realm of clustering algorithms, investigate how they function, and learn about their broad applications in a variety of industries.

What is Clustering?

Clustering is a machine learning approach that groups comparable data points together based on their intrinsic properties or similarities.

The main purpose of clustering is to partition a dataset into distinct clusters or groups, so that data points within the same cluster are more similar to one another than to data points in other clusters.

Clustering identifies subgroups within large datasets, ensuring that each cluster has greater internal similarity than the entire dataset.

In layman's terms, each cluster is made up of elements that are similar to one another but distinct from those in other clusters. Clustering algorithms examine the properties of data objects and divide them into groups based on those similarities.

Types of Clustering Algorithms

Different clustering algorithms suit different kinds of data. In this section, we'll explore techniques ranging from the simple K-Means to the more intricate Gaussian Mixture Model and other methods.

1. K-Means Clustering

K-Means, a popular and simple clustering algorithm, works by partitioning data into K clusters, where K is a user-defined parameter.

This approach uses an iterative process to allocate each data point to the nearest cluster centroid. Following these assignments, the centroids are adjusted to the mean of the data points assigned to each cluster.

K-Means repeats these two steps, assignment and update, until the assignments stop changing or an iteration limit is reached. Each round can only lower (or leave unchanged) the total squared distance between points and their centroids, so the procedure always converges, but only to a local optimum: the final clusters depend on the initial centroids, which is why the algorithm is commonly run several times from different starting points.
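The assignment and update steps can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function name `kmeans`, the toy `points`, and the deterministic starting centroids (k evenly spaced points from the input) are all assumptions made for the example. Real libraries typically start from random or k-means++ centroids.

```python
import math


def kmeans(points, k, max_iter=100):
    """Illustrative K-Means on a list of equal-length tuples."""
    # Assumption: a simple deterministic start that picks k evenly spaced
    # points from the input. Libraries usually use random or k-means++ starts.
    centroids = [points[i * len(points) // k] for i in range(k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the centroids are too
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(
                    sum(axis) / len(members) for axis in zip(*members)
                )
    return centroids, labels


# Hypothetical toy data: two well-separated groups of three points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centroids, labels = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

On data this cleanly separated the loop stops after two passes. On real data the result depends on the starting centroids, so it is worth comparing several runs.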

2. Mini Batch K-Means Clustering

Mini Batch K-Means is a variant of the regular K-Means technique that is intended to handle larger datasets more effectively.

Instead of analysing all data points in each iteration, it updates the cluster centroids using a small, randomly selected subset of the data (a mini-batch). This makes each iteration much cheaper, usually at the cost of slightly less accurate clusters than full K-Means.
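A rough sketch of the mini-batch idea follows. It assumes one common update rule, in which each centroid moves towards a newly assigned point with a step size of 1 / (number of points that centroid has absorbed so far). The function names, the toy data, the batch size, and the deterministic starting centroids are illustrative assumptions, not a reference implementation.

```python
import math
import random


def nearest(point, centroids):
    """Index of the centroid closest to the given point."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def mini_batch_kmeans(points, k, batch_size=3, n_batches=50, seed=0):
    rng = random.Random(seed)
    # Assumption: deterministic start from k evenly spaced input points.
    centroids = [points[i * len(points) // k] for i in range(k)]
    counts = [0] * k  # how many points each centroid has absorbed so far
    for _ in range(n_batches):
        batch = rng.sample(points, batch_size)  # random subset of the data
        assigned = [nearest(p, centroids) for p in batch]
        for p, c in zip(batch, assigned):
            counts[c] += 1
            rate = 1.0 / counts[c]  # step size shrinks as the centroid matures
            centroids[c] = tuple(
                (1 - rate) * m + rate * x for m, x in zip(centroids[c], p)
            )
    # Final pass: label every point with its nearest centroid.
    labels = [nearest(p, centroids) for p in points]
    return centroids, labels


# Hypothetical toy data: two well-separated groups of three points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centroids, labels = mini_batch_kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Only `batch_size` points are touched per update, which is where the speed-up on large datasets comes from.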

3. Mean Shift Clustering

Mean Shift is a non-parametric clustering approach that does not require a pre-specified number of groups.

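It treats the data as samples from an underlying density and repeatedly shifts each point towards the mean of the points in its neighbourhood, which moves it uphill towards the nearest mode (peak) of that density. The neighbourhood size, called the bandwidth, is the main parameter. Points that converge to the same mode form a cluster, so clusters emerge around the dense regions of the data.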
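The shifting procedure can be illustrated with a flat (uniform) kernel, where the neighbourhood is simply every point within a fixed radius. The sketch below is a simplified version under that assumption. The function name, the `bandwidth` value, and the rule that merges modes closer than half a bandwidth are choices made for the example.

```python
import math


def mean_shift(points, bandwidth, max_iter=100, tol=1e-6):
    """Illustrative flat-kernel mean shift on a list of tuples."""
    modes = []
    for start in points:
        current = start
        for _ in range(max_iter):
            # Neighbourhood: all data points within `bandwidth` of the current position.
            neighbours = [p for p in points if math.dist(p, current) <= bandwidth]
            shifted = tuple(sum(axis) / len(neighbours) for axis in zip(*neighbours))
            moved = math.dist(shifted, current)
            current = shifted
            if moved < tol:
                break  # the position has settled on a mode
        modes.append(current)

    # Assumption: modes closer than half a bandwidth count as the same cluster.
    centres, labels = [], []
    for mode in modes:
        for index, centre in enumerate(centres):
            if math.dist(mode, centre) < bandwidth / 2:
                labels.append(index)
                break
        else:
            centres.append(mode)
            labels.append(len(centres) - 1)
    return centres, labels


# Hypothetical toy data: two well-separated groups of three points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centres, labels = mean_shift(points, bandwidth=2.0)
print(len(centres), labels)  # 2 [0, 0, 0, 1, 1, 1]
```

Note that the number of clusters is never passed in. It falls out of the bandwidth: a larger radius merges nearby groups, a smaller one splits them.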

4. Divisive Hierarchical Clustering

Divisive Hierarchical Clustering is a top-down strategy that starts with a single cluster containing all data points and then recursively splits clusters into smaller sub-clusters until each data point is its own cluster or a stopping criterion is met.

It generates a dendrogram, a binary tree-like diagram that shows how clusters are split at each level and helps in visualising the relationships between them.

5. Hierarchical Agglomerative Clustering (Agglomerative Clustering)

Agglomerative Clustering, as opposed to divisive hierarchical clustering, is a bottom-up method.

It starts with each data point as a separate cluster and then merges the closest clusters iteratively until all data points belong to the same cluster. It generates a dendrogram, just like divisive clustering.
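The bottom-up merging can be sketched as follows. This version assumes single linkage, where the distance between two clusters is the distance between their closest members. Other linkage rules (complete, average, Ward) are equally common. The function name and the `n_clusters` stopping parameter are illustrative, and the brute-force pair search is only suitable for small inputs.

```python
import math


def agglomerative(points, n_clusters=1):
    """Illustrative single-linkage agglomerative clustering."""
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []  # merge history: the information a dendrogram displays

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        # Find the closest pair of clusters (brute force).
        distance, i, j = min(
            (linkage(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        merges.append((clusters[i], clusters[j], distance))
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)]
        clusters.append(merged)
    return clusters, merges


# Hypothetical toy data: two well-separated groups of three points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
clusters, merges = agglomerative(points, n_clusters=2)
print(sorted(sorted(c) for c in clusters))  # [[0, 1, 2], [3, 4, 5]]
```

Running with `n_clusters=1` performs every merge, and the recorded distances in `merges` give the heights at which branches of the dendrogram join.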

6. Gaussian Mixture Model (GMM)

GMM is a probabilistic clustering algorithm that assumes each cluster's data points have a Gaussian distribution.

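The entire dataset is modelled as a mixture of several Gaussian distributions, with each component representing a cluster. The parameters of these Gaussians (mixing weights, means, and covariances) are usually estimated with the Expectation-Maximization (EM) algorithm, and each point receives a probability of belonging to every cluster rather than a single hard label.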
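To make the EM loop concrete, here is a small sketch for the simplest case: one-dimensional data and two components. The function names, the starting values (means at the data minimum and maximum, equal weights, shared variance), and the variance floor `min_var` are assumptions chosen to keep the example short and deterministic.

```python
import math


def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm_1d(data, n_iter=50, min_var=1e-6):
    """Illustrative EM for a two-component Gaussian mixture in one dimension."""
    n = len(data)
    overall_mean = sum(data) / n
    overall_var = sum((x - overall_mean) ** 2 for x in data) / n
    # Assumed starting point: means at the extremes, equal weights.
    weights = [0.5, 0.5]
    means = [min(data), max(data)]
    variances = [overall_var, overall_var]
    resp = []
    for _ in range(n_iter):
        # E-step: responsibility of each component for each point.
        resp = []
        for x in data:
            dens = [w * gaussian_pdf(x, m, v)
                    for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate weights, means and variances from responsibilities.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var, min_var)  # floor avoids a collapsed component
    return weights, means, variances, resp


# Hypothetical toy data: five values near 1 and five values near 5.
data = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
weights, means, variances, resp = fit_gmm_1d(data)
hard_labels = [max(range(2), key=lambda k: r[k]) for r in resp]
print(hard_labels)  # [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
```

The `resp` rows are the soft assignments. Taking the most probable component, as `hard_labels` does, turns them into ordinary cluster labels.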

7. DBSCAN (Density-based Spatial Clustering of Applications with Noise)

DBSCAN is a clustering technique that organises data points according to their density.

It needs two parameters: epsilon, the radius within which two points count as neighbours, and MinPts, the minimum number of points that must lie within that radius for a point to be part of a dense region. DBSCAN can find clusters of arbitrary shape and is robust to outliers, because points that do not belong to any dense region are labelled as noise rather than forced into a cluster.
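A compact sketch of the procedure is shown below. Here `eps` is treated as the radius within which two points count as neighbours, and a point's neighbourhood includes the point itself when it is compared against `min_pts`. The function name, the `NOISE` marker, and the toy data are assumptions for the example. The brute-force neighbour search would be replaced by a spatial index in practice.

```python
import math

NOISE = -1  # label used here for points that belong to no cluster


def dbscan(points, eps, min_pts):
    """Illustrative DBSCAN with brute-force neighbour search."""
    labels = [None] * len(points)  # None means "not visited yet"

    def region(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region(i)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1  # point i is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in neighbours if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region(j)
            if len(j_neighbours) >= min_pts:  # j is also a core point
                queue.extend(j_neighbours)
    return labels


# Hypothetical toy data: two dense groups plus one isolated point.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1),
          (8.0, 8.0), (8.2, 7.9), (7.9, 8.3),
          (4.5, 0.0)]
print(dbscan(points, eps=0.6, min_pts=3))  # [0, 0, 0, 1, 1, 1, -1]
```

The isolated point is reported as noise instead of being attached to the nearest group, which is the behaviour that makes DBSCAN useful on data with outliers.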

8. OPTICS (Ordering Points to Identify Clustering Structure)

OPTICS is an extension of DBSCAN that reduces its sensitivity to the choice of epsilon. Rather than committing to a single epsilon, it orders the points and records a reachability distance for each, so that clusters of different densities can be read from the resulting reachability plot; MinPts is still required. It can also detect density-based cluster hierarchies.

9. BIRCH Algorithm (Balanced Iterative Reducing and Clustering Using Hierarchies)

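BIRCH is a hierarchical clustering method designed for large datasets. It builds a tree of Clustering Features (the CF tree), in which each node holds a compact summary of a group of points, so the algorithm works with these summaries instead of the full dataset. This reduces the volume of data that must be kept in memory (not its dimensionality) and lets points be clustered efficiently. BIRCH is well suited to online clustering tasks because new points can be inserted into the tree incrementally.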
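The key to BIRCH's incremental behaviour is that a Clustering Feature is additive. In the usual formulation a CF stores three things for a group of points: the count, the linear sum of the points, and the sum of their squared norms. Two CFs are merged by adding these fields, and the centroid and radius of the group can be recovered without revisiting the raw points. The class below sketches just that summary. The class and method names are illustrative, and the CF tree itself (node splitting, thresholds, branching factor) is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusteringFeature:
    n: int               # number of points summarised
    linear_sum: tuple    # per-dimension sum of the points
    squared_sum: float   # sum of squared norms of the points

    @classmethod
    def from_point(cls, point):
        return cls(1, tuple(point), sum(x * x for x in point))

    def merge(self, other):
        # Additivity: merging two summaries only needs field-wise addition.
        return ClusteringFeature(
            self.n + other.n,
            tuple(a + b for a, b in zip(self.linear_sum, other.linear_sum)),
            self.squared_sum + other.squared_sum,
        )

    def centroid(self):
        return tuple(s / self.n for s in self.linear_sum)

    def radius(self):
        # Root-mean-square distance of the summarised points from the centroid.
        centre = self.centroid()
        variance = self.squared_sum / self.n - sum(x * x for x in centre)
        return math.sqrt(max(variance, 0.0))  # guard against rounding below zero


# Hypothetical toy data: absorb three points one at a time.
cf = ClusteringFeature.from_point((1.0, 1.0))
for point in [(3.0, 1.0), (2.0, 4.0)]:
    cf = cf.merge(ClusteringFeature.from_point(point))

print(cf.n, cf.centroid())  # 3 (2.0, 2.0)
```

Because each new point only updates three fields, a summary can absorb a stream of data in constant memory, which is what makes incremental updates cheap.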

Applications of Clustering

Clustering is a versatile data analysis approach that has transformed how we comprehend and analyse large datasets.

Clustering algorithms enable us to detect patterns, make informed decisions, and uncover hidden insights across multiple sectors by grouping similar data points together.

In this section, we will look at some of the most significant uses of clustering in several domains, ranging from market segmentation to healthcare and beyond.

1. Market Segmentation

Market segmentation is a critical component of marketing strategy. Clustering algorithms assist organisations in identifying separate groups of clients who share similar traits, preferences, and behaviours.

Once these customer segments are known, companies can tailor their products, services, and marketing activities to the needs of each segment, thereby enhancing customer satisfaction and brand loyalty.

2. Retail Marketing and Sales

Clustering is critical in the retail industry for optimising marketing and sales activities. Retailers can personalise product suggestions, target promotions, and increase consumer interaction by grouping customers based on purchase behaviour, demographic data, or location.

Clustering also helps with store layout optimisation, inventory management, and demand forecasting, all of which lead to increased operational efficiency and profitability.

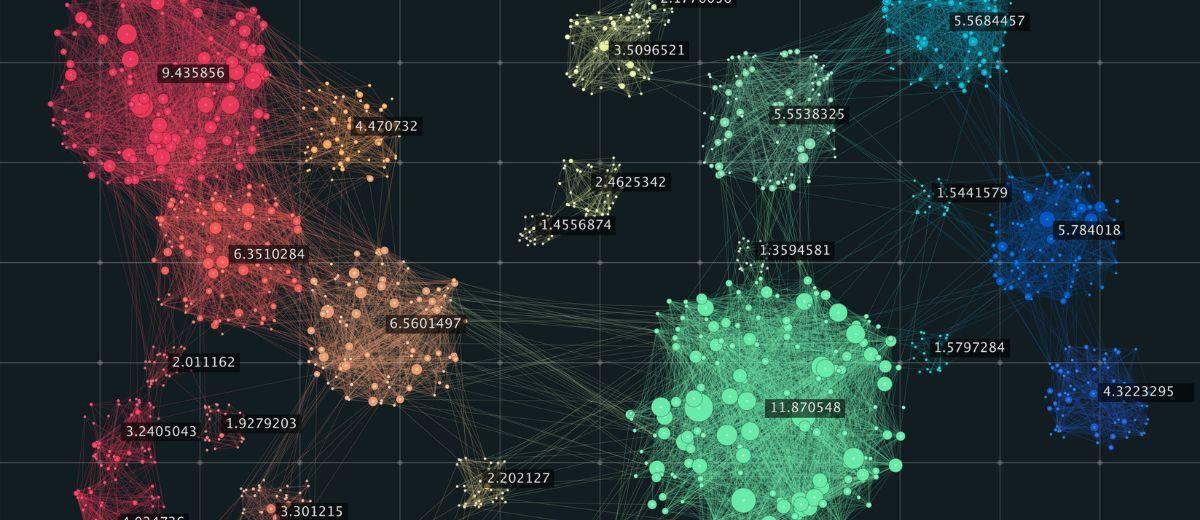

3. Social Network Analysis

The study of relationships and interactions among individuals or entities inside a network is known as social network analysis (SNA). Clustering techniques aid in the identification of communities, groups, and influential individuals within a network.

This information is useful for targeted marketing, comprehending information flow, detecting anomalies, and combatting online fraud and cyber threats.

4. Search Result Clustering

By grouping similar search results together, search result clustering improves the user's search experience. This allows users to explore the different aspects of a topic more efficiently and to locate valuable content that could otherwise be buried in a long list of results.

Clustering also helps e-commerce systems and content-based websites organise search results, making navigation more intuitive.

5. Life Science and Healthcare

Clustering is utilised for a variety of applications in the life sciences and healthcare industries. Clustering is used in genomics and proteomics to detect gene expression patterns, classify disease subtypes, and locate new biomarkers.

Patient data clustering in healthcare can help with disease diagnosis, treatment planning, and personalised therapy. Electronic health record (EHR) clustering can also aid in population health management and predictive analytics.

6. Data Processing

Clustering is important in data processing activities, including image and signal analysis. Image segmentation, for example, groups pixels with comparable features in order to extract meaningful regions from an image.

In signal processing, clustering helps with source separation and noise reduction. Anomaly detection uses clustering to find unusual patterns in huge datasets, which is critical for fraud detection, cybersecurity, and fault diagnostics in diverse systems.

Conclusion

Unsupervised algorithms, with clustering among the most widely used, have emerged as a formidable force in machine learning and data analysis. Their capacity to find patterns, detect anomalies, and expose underlying structures in unlabeled data makes them valuable in a variety of fields.

From clustering and dimensionality reduction to association rule mining and recommender systems, unsupervised algorithms continue to change how we understand data and use it to make informed decisions.

As data grows in complexity and volume, the importance of unsupervised learning in uncovering hidden insights and driving innovation is only going to expand. Adopting unsupervised algorithms enables academics, businesses, and data analysts to maximise the value of their data and stay ahead in the dynamic world of data-driven insights.
